Data center decommissioning

The only way to truly protect your data

Safe, secure, and sustainable data center decommissioning

When the time comes to move or retire data-bearing IT assets from your data centers, it is critical to decommission those assets securely and efficiently.

Featured resource

Solution Guides

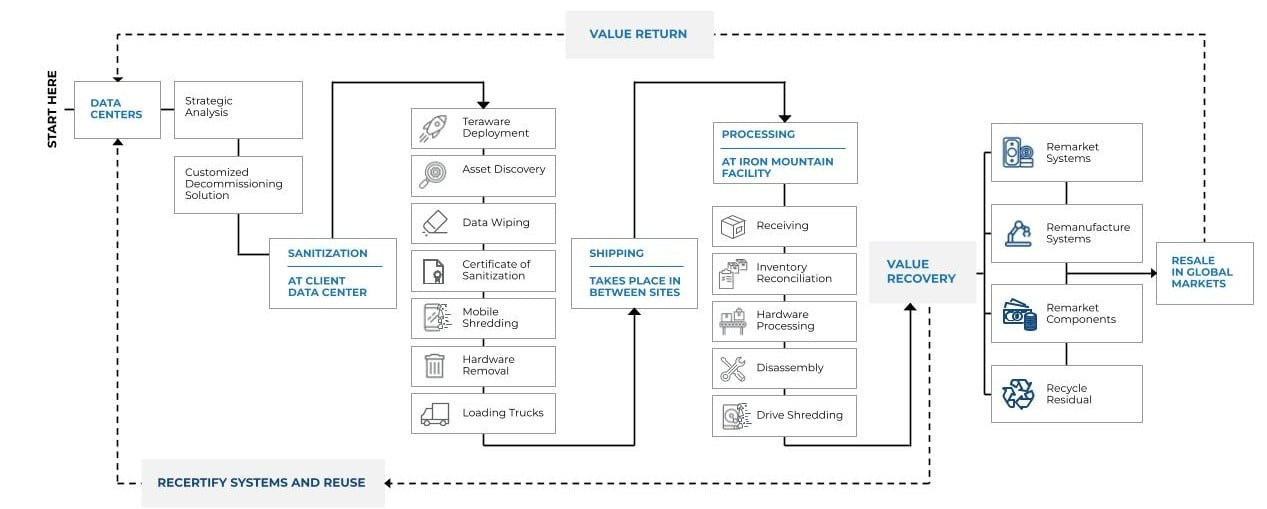

Iron Mountain's holistic approach to data center decommissioning

The world's leading cloud service providers, data center operators, and enterprises partner with Iron Mountain to unlock untapped potential and value from their outbound hardware. Learn more about our integrated approach, which protects your data, maximizes your financial returns, and reduces environmental impact.

How we can help

Reduce disruption, minimize environmental impact, and increase recovery value by comprehensively sanitizing every data-bearing device. Decommission even the largest data centers up to 10 times faster, and virtually eliminate the risk of data loss.

IT asset remarketing

Recover maximum value and drive return on investment (ROI) with Iron Mountain’s industry-leading asset remarketing capabilities.

Environmentally responsible dispositioning

Ensure all decommissioning activities adhere to industry best practices and regulatory requirements for environmental protection and Occupational Health and Safety (OHS).

Secure chain of custody

Identify and track every serialized asset with multiple checkpoints and secure logistics, from client sites to world-class processing centers.

A deeper dive into our data center decommissioning process

What we process

Servers

Storage systems and devices

Networking gear

Computer tapes

Racking

Related resources

Whitepaper

Data center decommissioning: Hyperscale-grade audit compliance checklist

From erasing and shredding drives to recycling or reselling racks, servers, and other equipment, Iron Mountain maintains a fully traceable audit trail, ensuring end-to-end compliance during data center decommissioning.

Premium whitepaper

Data center decommissioning best practices report

For data center operators, expert decommissioning is a must. Remaining a leader in technology innovation and efficiency means frequently refreshing both hardware and infrastructure, even as the complexity and variety of those refreshes continue to grow.

Contact us

Fill out this form and an Iron Mountain specialist will contact you within one business day.